This is an old revision of the document!

Set Constraints for Algebraic Datatype Inference

We want that our compiler detects correctly the possible subset of types for each function.

Example

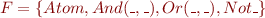

Using the following grammar :

type form = Atom of string

| And of form*form

| Or of form*form

| Not of form

we can have the following types :  which is an infinite set (“_” represents any kind of type).

which is an infinite set (“_” represents any kind of type).

But if we define the following function :

let rec elimOr(f : form) : form =

match f with

S1: | Atom s -> Atom s

S2: | And(f1,f2) -> And(elimOr f1, elimOr f2)

S3: | Or(f1,f2) -> Not(And(Not(elimOr f1), Not(elimOr f2)))

S4: | Not(f1) -> Not(elimOr f1)

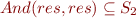

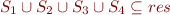

It is clear that the output types of this function is a subset of  (as the

(as the  is removed). In fact, if we define

is removed). In fact, if we define  as the resulting type of this function,

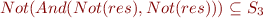

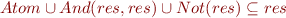

as the resulting type of this function,  can be computed by computing the fixpoint of :

can be computed by computing the fixpoint of :

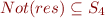

In this particular case, the fixpoint is :  which computes correctly the fact that the

which computes correctly the fact that the  has been removed.

has been removed.

- Type inference. Semantics of untyped lambda calculus.