Set Constraints for Algebraic Datatype Inference

We want that our compiler detects correctly the possible subset of types for each function.

Example

Consider data type definition

type form = Atom of string

| And of form * form

| Or of form * form

| Not of form

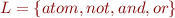

We represent values of this data type as ground terms in language  .

.

Define function:

let rec elimOr(f : form) : form =

match f with

S1: | Atom s -> Atom s

S2: | And(f1,f2) -> And(elimOr f1, elimOr f2)

S3: | Or(f1,f2) -> Not(And(Not(elimOr f1), Not(elimOr f2)))

S4: | Not(f1) -> Not(elimOr f1)

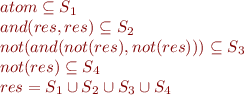

We infer the subset of formulas returned by this function using set constraints. Introduce set for each case of pattern matching expression. Let 'res' denote the set of values that can be returned by the function. We obtain the system of constraints:

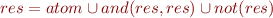

We can prove that the least fixpoint is the same as the least fixpoint of

the last expression is a description of the solution set. We can use it later on to prove that the result does not mention 'or' syntax tree node.

- Type inference. Semantics of untyped lambda calculus.