Lecture 7: Formulas as a unifying domain for static analysis

General setup

Analysis domain based on formulas and main operations:

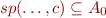

- set of all first-order formulas (or another set closed under propositional operations and quantifiers)

- set of all first-order formulas (or another set closed under propositional operations and quantifiers) - subset of formulas that we use as our analysis domain, typically finite. If we used abstract interpretation framework, we would have

- subset of formulas that we use as our analysis domain, typically finite. If we used abstract interpretation framework, we would have ![Math $\gamma(F)=\{st \mid [\![F]\!]st = \mbox{true}\}$](/w/lib/exe/fetch.php?media=wiki:latex:/img047babda30c65a6d2c41798f020da121.png)

- normalization function, ensuring that the result is in our domain, works as

- normalization function, ensuring that the result is in our domain, works as  but goes from formulas to formulas

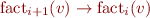

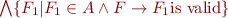

but goes from formulas to formulas- key requirement: the implication

must be a valid formula (

must be a valid formula ( produces a weaker formula)

produces a weaker formula)

- computes strongest postcondition on formulas precisely, e.g.

- computes strongest postcondition on formulas precisely, e.g.  . In some cases, we may want to eliminate the existential quantifier from the

. In some cases, we may want to eliminate the existential quantifier from the  formula, if for example

formula, if for example  allows the use of

allows the use of  and

and  , but

, but  doesn't. It really depends on what the analysis is about.

doesn't. It really depends on what the analysis is about.

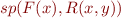

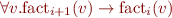

Note:  and not in

and not in  . We then should find a way to “put back” in

. We then should find a way to “put back” in  what we obtain from

what we obtain from  . The figure below should help understanding the transformations.

. The figure below should help understanding the transformations.  so we need the normalization function

so we need the normalization function  to push back

to push back  in the set

in the set  (

( ).

).

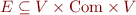

Control-flow graph is graph  where

where  are program points (between statements) and

are program points (between statements) and  are edges labelled with program commands.

are edges labelled with program commands.

Control Flow Analysis: Flow chart representation of a program. It is defined by some set of node ( ) and edges (

) and edges ( ).

).

- Example:

As we are analyzing the program and we don't have any initial knowledge about the context we are starting in, we make our first assumption as general as possible.

The state of our analysis (set of computed facts) maps program points to formulas in  , that is:

, that is:  .

.

Basic philosophy of the analysis: compute precise results whenever possible, then apply  to fit it into

to fit it into  .

.

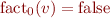

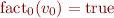

Initial state:  , except for initial program point

, except for initial program point  , where

, where  . This can be different if we know something about the initial program state. For instance, when analyzing Java programs, we could use the fact that primitive data types are initialized to default values.

. This can be different if we know something about the initial program state. For instance, when analyzing Java programs, we could use the fact that primitive data types are initialized to default values.

- intuition: computing abstraction of the set of reachable states

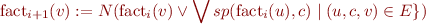

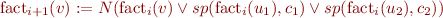

- Use the Update rule:

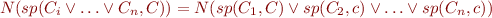

to compute the impact of all incoming edges

For example, if we have a state  reachable from two states

reachable from two states  and

and  through the commands

through the commands  and

and  respectively, we would have the following update rule for

respectively, we would have the following update rule for  :

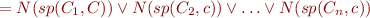

:

When we do an analysis we must consider two properties: Correctness (soundness) and Termination

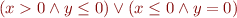

Correctness (soundness): if for each program point  for all

for all  , then the facts are true in all program executions and they correspond to loop invariant(s). For more information see correctness of formula propagation.

, then the facts are true in all program executions and they correspond to loop invariant(s). For more information see correctness of formula propagation.

(If we stopped the analysis before this happens, then the result is not correct, it does not take into account all executions).

Note: To prove correctness for the guarded command [ ] (non-deterministic choice) we can choose any path we like, the only aspect that matters is fairness (in the choice).

Termination:

- observe the sequence of formulas

for

for

- start from false, become weaker and weaker

- require that there are no infinite ascending chains of weaker and weaker formulas

Analysis stops when  . That is, no new states are generated by strongest postcondition (we have reached a fix point - formula not trivial).

. That is, no new states are generated by strongest postcondition (we have reached a fix point - formula not trivial).

Note: If you end up with a situation where  a state

a state  for which

for which  , then the analysis gives no useful information about state

, then the analysis gives no useful information about state  . In this case, you may want to refine

. In this case, you may want to refine  (and hence also change the function

(and hence also change the function  ). Sometimes, this can be done automatically.

). Sometimes, this can be done automatically.

Examples

Conjunctions of fixed predicates as analysis domain

(an example of Independent Attribute Analysis)

Definition of the domain

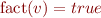

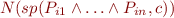

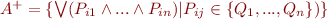

We have some predicates  and a set of formulas

and a set of formulas  . Let us observe that

. Let us observe that  (the height of a tree representing a formula), and

(the height of a tree representing a formula), and  (the number of possible formulas).

(the number of possible formulas).

Normalization operator

We define  a normalization function (look at the General Setup at the top of the page for more details). We will look at two possible ways to construct it for our example.

a normalization function (look at the General Setup at the top of the page for more details). We will look at two possible ways to construct it for our example.

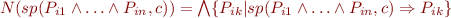

First idea, computing abstract postcondition

We want to compute  . In other words, we want to find some predicates that are true after conjunction of some predicates

. In other words, we want to find some predicates that are true after conjunction of some predicates  and command

and command  . We use the following construction:

. We use the following construction:

To understand why this is indeed a normalization, notice that even though  , we're computing a conjunction on predicates which are implied by the postconditions and therefore, this conjunction is also

, we're computing a conjunction on predicates which are implied by the postconditions and therefore, this conjunction is also  .

.

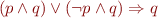

(Alternatively, we can use weakest preconditions and write  .)

.)

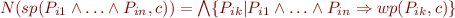

Second idea

is still a normalization function. We have

is still a normalization function. We have  and we compute

and we compute  such that

such that  is the strongest possible. If we are trying to find strongest precondition, then we would compute

is the strongest possible. If we are trying to find strongest precondition, then we would compute

The intuition here is really to take all possible formulas in the simplified language which are implied by the formula to be normalized. To avoid weaker formulas, we take conjunction.

Note

We may want to add the predicate  . Notice that if

. Notice that if  holds at any given point, then every property following will still hold. In other words, if you have some unreachable code in a program, then everything that follow will still be unreachable.

holds at any given point, then every property following will still hold. In other words, if you have some unreachable code in a program, then everything that follow will still be unreachable.

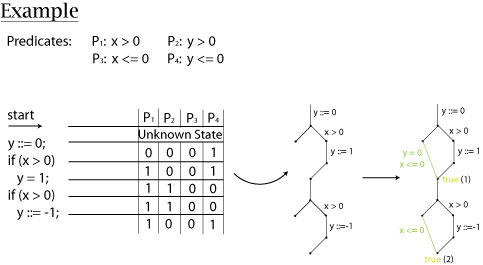

Example

Consider the following (embarrassingly simple) program:

y := 0 if(x > 0) y := 1 if(x > 0) y := -1

The following schema shows several things;

- For all the predicates which can hold for this program (using

,

,  and omitting

and omitting  ), the table on the left shows which ones hold for a given program state. 1 means the predicate holds

), the table on the left shows which ones hold for a given program state. 1 means the predicate holds - The two graphs on the right are flow-graphs, where statements are represented as edges and states as vertices (nodes). On the second one, annotations in colours represent predicates (while the black text still mark statements).

Looking at the program, one should be able to state that at its end,  will always be

will always be  . However, the conclusion reached at the end of the analysis is

. However, the conclusion reached at the end of the analysis is  , in other words, it tells us “the predicate

, in other words, it tells us “the predicate  is always true at the end of the program”. In yet other words, this tells us nothing interesting. Why the difference?

is always true at the end of the program”. In yet other words, this tells us nothing interesting. Why the difference?

Someone looking at the program will certainly notice that the one place where  is assigned to

is assigned to  will have no effect on the final result. The reason is that the assignment is “canceled out” by another one which occurs for the same condition,

will have no effect on the final result. The reason is that the assignment is “canceled out” by another one which occurs for the same condition,  , and

, and  doesn't change in between.

doesn't change in between.

The fact that we only used conjunctions is our analysis makes it path-insensitive. Intuitively, since we're only taking conjunctions, our normalization is too conservative and we will not be able to retain properties associated to certain paths. Path-sensitivity is often used as a measure of the precision of an analysis.

Disjunctions of conjunctions of predicates

(an example of Dependent Attribute Analysis)

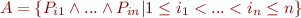

As we just saw, our analysis domain turned out to be too restrictive. We may want to use a more extended one. Let's define a new set of formulas:

The use of disjunctions allows us to write predicates about attributes for some particular paths. For this reason, this is called relational (or dependent) attribute analysis.

If we go back to our previous example, we could end up with a predicate at the final point which would look (at least partially) somehow like:

…where the first part represents the conclusions which can be made if the branches  were taken and the second the ones when they weren't. From there we'd find that

were taken and the second the ones when they weren't. From there we'd find that  always holds. (Note that this is informal and was not done in class.)

always holds. (Note that this is informal and was not done in class.)

The normalization function now looks like:

While this construction is valid mathematically speaking and it allows us to express more properties on programs, we have a problem with the exponential growth of the size of  , and the height of the formulas

, and the height of the formulas  . Consequently, we need some optimizations. For instance, we could do simplifications such as:

. Consequently, we need some optimizations. For instance, we could do simplifications such as:

For mode details, see the papers.

Note: There is a tradeoff between precision and efficiency.

More in

- predicate abstraction (Cartesian abstraction)

Linear inequalities

(section not covered in class)

Example implementation: Parma Polyhedra Library and a paper about Parma. Geometric interpretation of linear equations.

Special cases:

- constant propagation

- interval analysis

- octagon domain

Simple pointer analysis

All we have seen so far was about simple values. In complex programs we have runtime effects, e.g. pointer.

In fact, it can happen that two variables with different names (aliases) represent the same object (in memory). Take the following example:

C code: (x and y are two pointers already allocated) *x = 3; *y = 2;

The behavior of this program will strictly depend on the fact that pointers  and

and  are not pointing on the same memory address (so, in the code, there is no such statement

are not pointing on the same memory address (so, in the code, there is no such statement  ). The semantic that we have used since now doesn't allow to correctly handle those runtime effects.

). The semantic that we have used since now doesn't allow to correctly handle those runtime effects.

Consider constant field propagation with

x = new Node(); y = new Node(); x.f = 1; y.f = 2;

or with

y = x.next; x.f = 1; y.f = 2;

Allocation-site based analyses and logical representation of their abstract domains.