Informal Proofs and Mathematical Induction

Proof Rules

We next summarize proof rules that we can use to establish validity of formulas of first-order logic. We will keep these rules in mind for informal reasoning, but they can also be mechanized and we illustrate this fact in the Isabelle theorem prover. (Later we look at resolution procedure as an alternative to these proof rules.)

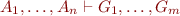

When proving a formula, we typically have a set of current assumptions and a goal. Moreover, we will consider the possibility of having multiple alternative goals, to explore multiple possible proofs at the same time. A state of our proof attempt is therefore given by a sequent of the form

where  are assumptions and

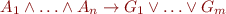

are assumptions and  are goals. The meaning of a sequent is the implication formula

are goals. The meaning of a sequent is the implication formula

We admit the case of no assumptions ( ) in which the left hand side is true and the case of no goals (

) in which the left hand side is true and the case of no goals ( ) in which case the right-hand side is considered to be false. (You can think of one of the assumptions always being the trivial assumption true, and one of the goals always being the trivially unprovable goal false.)

) in which case the right-hand side is considered to be false. (You can think of one of the assumptions always being the trivial assumption true, and one of the goals always being the trivially unprovable goal false.)

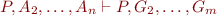

The rules below will have the form

where  are sequents. The meaning of each rule is that if we establish sequents

are sequents. The meaning of each rule is that if we establish sequents  then we can derive sequent

then we can derive sequent  . In other words, if we wish to prove sequent

. In other words, if we wish to prove sequent  , it suffices to prove sequents

, it suffices to prove sequents  . The rules below allow us to eliminate propositional connectives in valid formulas until we obtain sequents of the form

. The rules below allow us to eliminate propositional connectives in valid formulas until we obtain sequents of the form  , which is a trivially valid sequent because one of its goals appears as an assumption.

, which is a trivially valid sequent because one of its goals appears as an assumption.

To make rules concise we avoid writing common assumptions and conclusions. We assume that we can apply each rule in a context: we can introduce any set of assumptions simultaneously to all left-hand sides of a rule. Similarly, we can introduce another set of assumptions to all right-hand sides of the sequents in the rule. We ignore the ordering of assumptions and goals, because they are connected by associative and commutative logical operations.

Below we present one rule for each logical connective ( ,

,  ,

,  ,

,  ) and quantifier (

) and quantifier ( ,

,  ). For each of these construct we show how to prove the sequent when the formula with the connective is on the left-hand side and when the formula with the connective is on the right hand side. The English comment is just to aide some intuition and is by necessity imprecise.

). For each of these construct we show how to prove the sequent when the formula with the connective is on the left-hand side and when the formula with the connective is on the right hand side. The English comment is just to aide some intuition and is by necessity imprecise.

Conjunction-Left

If we can prove something from two assumptions, we can also prove it from their conjunction.

Conjunction-Right

To prove a conjunction, prove each conjunct.

Disjunction-Left

To prove something from a disjunctive assumption, prove it by case analysis, one case for each disjunct.

Disjunction-Right

To prove a disjunction try to prove one of the disjuncts.

Implication-Left

To prove something from an implication, prove it from its conclusion and also prove the premise.

Implication-Right

To prove an implication, assume its premise and prove its goal.

Negation-Left

Instead of proving a goal, you can assume its negation and prove any remaining goals (this generalizes proof by contradiction).

Negation-Right

If you prove one of the goals, you can assume its negation and prove remaining goals.

Forall-Left

If you have a universally quantified assumption, you can instantiate universal quantifier with any value, denoted by term t (this is what “for all” means). You keep the original quantified assumption in case you need to instantiate it multiple times.

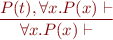

Forall-Right

To prove a universally quantified goal, prove the goal for an arbitrary element, denoted by fresh variable  . Informally we would say “let

. Informally we would say “let  be arbitrary…”.

be arbitrary…”.

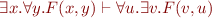

Exists-Left

When you have an existentially quantified assumption, you can pick a witness for existential statement and denote it by fresh variable “x_0”. Informally we would say “let  be such

be such  ”.

”.

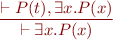

Exists-Right

To prove an existentially quntified statement, supply a witness for the existential quantifier, given by value of some term t. Keep the original existential quantifier in case this was not the right instantiation.

Example

Let us prove the following formula:

Using Proof Rules in Isabelle

Isabelle theorem prover implements these rules. It names them:

- conjE, conjI, disjE, disjI1, disjI2, impE, impI, allE, allI, exE, exI

The “E” (elimination) rules are for constructs on left-hand side, and “I” (introduction) rules are for constructs on right-hand side.

More information: Isabelle tutorial, Chapter 5 “The Rules of the Game”.

Demo: Proving above example in Isabelle. A working Isabelle script:

theory ForallExists imports Main begin lemma fe1: "(EX x. ALL y. F x y) --> (ALL u. EX v. F v u)" apply (rule "impI") apply (rule "allI") apply (erule "exE") apply (erule "allE") apply (rule "exI") apply assumption done lemma fe2: "(EX x. ALL y. F x y) --> (ALL u. EX v. F v u)" apply auto done end

Completeness for First-Order Logic

Completeness theorem: If a first-order logic formula without equality is valid, it can be shown valid using the above rules.

- Gallier Logic Book, Section 5.4 page 187+23, Section 5.5

We will later introduce a different proof system (resolution), for which we will prove completeness.

Proving Universally Quantified Statements by Mathematical Induction

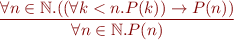

Stepwise Induction

Total Induction

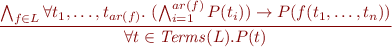

Structural Induction

Stepwise induction allows us to prove properties of natural numbers by proving that it holds for 0 and that it extends with each successor. Structural induction similarly allows us to prove that if property holds for basic structures and that if it holds for smaller structures then it also holds for larger structures. Examples of such structures are terms or expression trees.

Consider a language  of function symbols. Let

of function symbols. Let  denote the number of arguments (arity) of a function symbol

denote the number of arguments (arity) of a function symbol  . Let

. Let  denote the set of all terms in this language. To prove a property for all terms, we show that if the property holds for subterms, then it holds for the term built from these subterms.

denote the set of all terms in this language. To prove a property for all terms, we show that if the property holds for subterms, then it holds for the term built from these subterms.

Note that if  is a constant we assume

is a constant we assume  . Therefore, structural induction requires us to prove

. Therefore, structural induction requires us to prove  for all constants

for all constants  .

.

- Calculus of Computation Textbook, Chapter 4 (some example refer to previous chapters, if you do not understand them, ignore them for now)